Deep learning for fine-grained molecular-based colorectal cancer classification

Highlight box

Key findings

• In this study, a fine-grained classification method for colorectal cancer (CRC) based on the combination of hematoxylin and eosin (H&E)-stained tissue section images and deep learning (DL) techniques was proposed, with an area under the receiver operating characteristic curve (AUC) of 0.791 and an accuracy of 0.524.

What is known and what is new?

• In clinical practice, traditional molecular detection methods for CRC have several drawbacks. In recent years, with the growth of interdisciplinary research between computer science and medicine, DL has found broad application in medical image analysis and genomics research.

• A new dataset with gene-level annotations is introduced, containing 383 samples from The First Hospital of Lanzhou University, which compensates for the lack of Asian data in public databases. A model with a Convolutional Neural Network (CNN) as the feature extractor and a Vision Transformer (ViT) as the feature aggregator is proposed and achieves good results.

What is the implication, and what should change now?

• We validated the feasibility of fine-grained CRC classification and proposed a benchmark with relatively good model performance, which provides a reference for the molecular diagnosis of CRC and the development of precision medicine. However, owing to the limitations of the model and the lack of data sharing between hospitals in different regions, the accuracy remains modest; we will continue to optimize the model and expand the dataset through multicenter collaboration.

Introduction

Colorectal cancer (CRC) is one of the most common malignant tumors worldwide and a leading cause of cancer-related mortality (1). Among 36 cancers in 185 countries, CRC ranks fourth in terms of incidence and third in terms of mortality (2). This highlights the urgent need for personalized treatment plans and accurate prognostic assessments.

According to the National Comprehensive Cancer Network (NCCN) Colorectal Guidelines, Version 1.2024 (3), microsatellite instability (MSI) and BRAF, KRAS, and NRAS mutations are recommended as routine molecular markers for testing in all patients diagnosed with CRC. In clinical practice, molecular testing is primarily conducted using polymerase chain reaction (PCR) (4) or next-generation sequencing (NGS) (5). Tissue sections stained with hematoxylin and eosin (H&E) contain a wealth of information for routine histopathological analysis, and H&E staining is the foundation of pathological diagnosis. Whole slide imaging converts H&E-stained tissue sections into high-resolution digital whole slide images (WSIs), opening up the possibility of applying artificial intelligence (AI) to pathology (6,7).

In CRC, patients with MSI typically have a better prognosis and a higher survival rate, and MSI status informs decisions on adjuvant chemotherapy and predicts response to immune checkpoint inhibitors (8). These patients are more responsive to immunotherapy, including programmed cell death protein 1 (PD-1) inhibitors, and can be treated with neoadjuvant immunotherapy alone or in combination with other therapeutic modalities (9,10). With the support of deep learning (DL), Kather et al. first showed in 2019 that deep residual learning can predict MSI status directly from H&E histology (11). Echle et al. trained a DL detector to recognize MSI status (12). In 2023, Wagner et al. developed a transformer-based pipeline that predicts MSI status with clinical-grade performance (13). Also in 2023, Chang et al. achieved an area under the receiver operating characteristic curve (AUC) of 0.954 for MSI prediction by combining the Convolutional Neural Network (CNN) model INSIGHT with a self-attention model (14).

However, with the development of precision medicine, personalized cancer treatment requires accurate biomarker assessment, and combining such assessment with DL is crucial for improving health equity and advancing this field (15). Mutations in BRAF, KRAS, and NRAS, which encode downstream effectors of the epidermal growth factor receptor (EGFR) signalling pathway, have been linked to resistance to EGFR-targeted therapies in CRC, diminishing the effectiveness of drugs such as cetuximab and panitumumab (16,17). DL-based AI has shown promising potential in molecular detection. For example, in 2024, Kim et al. differentiated BRAF V600E-like from RAS-like alterations in encapsulated follicular patterned thyroid tumors based on histologic features (18). Jiang et al. also reported good results for predicting KRAS mutations in CRC (19). In addition, Li et al. showed that DL can distinguish between BRAF, KRAS, and NRAS genotypes in left-sided CRC (20). Overall, DL-based AI shows valuable potential for genetic testing in malignant tumors, and its cost-effectiveness and efficiency could enable widespread application.

In clinical practice, conventional molecular detection of MSI status and BRAF, KRAS, and NRAS mutations faces several challenges, including technical complexity, the need for specialized molecular laboratories and trained personnel, long turnaround times, and high costs. In this study, using WSIs of H&E-stained tissue sections from formalin-fixed paraffin-embedded (FFPE) histological specimens as raw data, we propose a hybrid DL method combining a CNN and a Vision Transformer (ViT) for fine-grained classification. We also analyzed the model’s bias across clinical subgroups defined by gender, age, and tumor location to assess its broad applicability. Our aim is to explore a less costly and less time-consuming technique that offers new ideas and solutions for the molecular diagnosis of CRC. We present this article in accordance with the TRIPOD reporting checklist (available at https://tcr.amegroups.com/article/view/10.21037/tcr-2024-2348/rc).

Methods

Patient cohorts and ethics statement

We retrospectively collected data from 417 patients with CRC who underwent molecular pathology testing at The First Hospital of Lanzhou University (LZUFH) from January 1, 2021 to January 1, 2024. Only patients who met both of the following criteria were included: (I) a complete molecular pathology examination; (II) available H&E-stained tissue sections. Finally, 383 cases were included to form the LZUFH_CRC dataset for fine-grained molecular-based CRC classification (Figure 1A). The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Ethics Committee of LZUFH (No. LDYYLL-2024-740) and the requirement for individual consent for this retrospective analysis was waived.

LZUFH_CRC dataset preparation

The molecular type label for each patient was obtained from the Molecular Pathology Laboratory of the Pathology Department at LZUFH. The class composition of the dataset is shown in Figure 1B. All labels were generated with the same testing strategy: FFPE samples with clear molecular pathological diagnoses were selected and analyzed by real-time fluorescence quantitative PCR (qPCR). The diagnostic criteria strictly followed the NCCN Clinical Practice Guidelines in Oncology, Version 2.2021.

H&E staining is the most commonly used histopathological staining method in clinical practice. To ensure diversity of tissue staining in the dataset, the H&E sections in this study were stained by different pathology technicians. The original WSIs were scanned with the Wisleap WS-10 Digital Whole Slide Scanner [Wisleap Medical Technology (Jiangsu) Co. Ltd., Changzhou, China] at a resolution of 0.23 µm/pixel, i.e., 40× magnification. A pathologist with a deputy senior professional title or above manually delineated the tumor regions on the original WSIs (Figure 1C) using the Labelme annotation software, generating annotation masks. A second pathologist with a deputy senior professional title or above then verified and refined the annotations to ensure the accuracy (ACC) and consistency of the labeled areas (Figure 1D) (21).

The Cancer Genome Atlas (TCGA) dataset

The TCGA dataset, comprising diagnostic slides from CRC patients in SVS format, was downloaded from the Genomic Data Commons (GDC) (https://gdc.cancer.gov). In this study, it is referred to as TCGA_CRC.

Image processing

In principle, higher resolution aids the classification of pathological images, but it also demands more storage space and computational resources. Balancing these two considerations, we used WSIs at 20× magnification.

Using an NVIDIA RTX3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), we tiled the 20× WSIs into non-overlapping 224×224 patches for fine-tuning the feature extractor of the DL model. Each patch was labeled either as the molecular subtype of its WSI or as normal, based on the pathologist-annotated mask of cancerous regions.
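A minimal sketch of the non-overlapping tiling and patch labeling described above, assuming the 20× slide and the pathologist’s tumor mask are already loaded as NumPy arrays of the same height and width. The function name `tile_and_label` and the rule that a patch counts as tumor when at least half of its pixels lie inside the annotation (`min_tumor_frac=0.5`) are hypothetical; the text does not state the exact cut-off.

```python
import numpy as np


def tile_and_label(wsi, tumor_mask, subtype, patch=224, min_tumor_frac=0.5):
    """Cut a 20x slide into non-overlapping patch x patch tiles and label each one.

    wsi:        H x W x 3 uint8 array (slide at 20x).
    tumor_mask: H x W bool array rasterized from the pathologist's annotation.
    subtype:    slide-level molecular label, e.g. "MSI".
    min_tumor_frac is a hypothetical cut-off, not a value reported in the paper.
    Returns (row, col, tile, label) tuples with label in {subtype, "normal"}.
    """
    h, w = tumor_mask.shape
    tiles = []
    # Stepping by the patch size gives a grid without overlap; incomplete
    # tiles at the right and bottom borders are dropped.
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            tumor_frac = tumor_mask[y:y + patch, x:x + patch].mean()
            label = subtype if tumor_frac >= min_tumor_frac else "normal"
            tiles.append((y // patch, x // patch, wsi[y:y + patch, x:x + patch], label))
    return tiles


# Toy example: a 448 x 500 "slide" whose top-left 224 x 224 block is annotated tumor.
slide = np.zeros((448, 500, 3), dtype=np.uint8)
mask = np.zeros((448, 500), dtype=bool)
mask[:224, :224] = True
tiles = tile_and_label(slide, mask, "MSI")
print([(r, c, label) for r, c, _, label in tiles])
# → [(0, 0, 'MSI'), (0, 1, 'normal'), (1, 0, 'normal'), (1, 1, 'normal')]
```

The 500-pixel width leaves a 52-pixel strip that does not fill a whole patch, so it is discarded rather than padded; padding would be an equally valid design choice.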

For training and inference on whole WSIs, we used an automated image-processing pipeline. Because 20× WSIs are very large, the foreground contour was detected on the 5× WSI, and its coordinates were multiplied by the magnification ratio when applied at 20×. On the 5× WSI, a suitable threshold was determined automatically using Otsu binarization (22) in Hue-Saturation-Value (HSV) space. Small gaps were then eliminated by a morphological closing operation to better preserve the integrity of the foreground. Finally, the Douglas-Peucker polygon approximation method (23) was used to smooth the contour, yielding a concise and accurate foreground region. To reduce potential adverse effects of factors such as staining variability during model training, Macenko color normalization (24) was applied to the WSIs.
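The foreground-detection steps above can be sketched in plain NumPy as follows. This is a minimal sketch under stated assumptions: Otsu’s method is applied to the HSV saturation channel (the text says only “HSV space”), the closing uses a 3×3 square, the Douglas-Peucker tolerance `eps` is arbitrary, and contour extraction itself is omitted (a toy contour is given directly). All function names are hypothetical; libraries such as OpenCV provide equivalent routines. The rescaling factor of 4 follows from the two magnifications (20×/5×).

```python
import math

import numpy as np


def saturation(rgb):
    """HSV saturation of an RGB uint8 image, rescaled to 0..255."""
    rgb = rgb.astype(np.float64)
    cmax, cmin = rgb.max(axis=-1), rgb.min(axis=-1)
    sat = np.divide(cmax - cmin, cmax, out=np.zeros_like(cmax), where=cmax > 0)
    return np.round(sat * 255).astype(np.uint8)


def otsu_threshold(gray):
    """Otsu's method: the threshold t that maximizes between-class variance."""
    prob = np.bincount(gray.ravel(), minlength=256) / gray.size
    omega = np.cumsum(prob)                  # weight of the class "<= t"
    mu = np.cumsum(prob * np.arange(256))    # first moment up to t
    denom = omega * (1.0 - omega)
    sigma_b = np.divide((mu[-1] * omega - mu) ** 2, denom,
                        out=np.zeros(256), where=denom > 0)
    return int(np.argmax(sigma_b))           # foreground: gray > t


def close_mask(mask, r=1):
    """Binary closing (dilation, then erosion) with a (2r+1) x (2r+1) square; r >= 1."""
    def dilate(m):
        p = np.pad(m, r, constant_values=False)
        h, w = m.shape
        return np.stack([p[i:i + h, j:j + w]
                         for i in range(2 * r + 1) for j in range(2 * r + 1)]).any(axis=0)

    big = np.pad(mask, r, constant_values=False)  # margin so dilation can grow
    closed = ~dilate(~dilate(big))                # erosion = complement of dilated complement
    return closed[r:-r, r:-r]


def douglas_peucker(points, eps):
    """Simplify a polyline, keeping only points farther than eps from the chord."""
    if len(points) < 3:
        return list(points)
    (x1, y1), (x2, y2) = points[0], points[-1]
    dx, dy = x2 - x1, y2 - y1
    norm = math.hypot(dx, dy)

    def dist(p):
        if norm == 0:                        # closed contour: first point == last point
            return math.hypot(p[0] - x1, p[1] - y1)
        return abs(dy * (p[0] - x1) - dx * (p[1] - y1)) / norm

    idx, dmax = max(((i, dist(p)) for i, p in enumerate(points[1:-1], 1)),
                    key=lambda t: t[1])
    if dmax <= eps:
        return [points[0], points[-1]]
    return douglas_peucker(points[:idx + 1], eps)[:-1] + douglas_peucker(points[idx:], eps)


# Toy 5x thumbnail: white glass with a pink tissue block that has a one-pixel gap.
thumb = np.full((6, 6, 3), 255, dtype=np.uint8)
thumb[1:5, 1:5] = (200, 80, 160)
thumb[2, 2] = 255
sat = saturation(thumb)
t = otsu_threshold(sat)
fg = close_mask(sat > t)                     # the gap at (2, 2) is filled

contour_5x = [(1, 1), (2, 1), (3, 1), (4, 1), (4, 4), (1, 4)]
simplified = douglas_peucker(contour_5x, eps=0.5)
contour_20x = [(4 * x, 4 * y) for x, y in simplified]   # 20x / 5x = 4
```

Here the three collinear points along the top edge collapse into a single segment, and the simplified contour is then scaled to 20× coordinates.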

Model structure

Our model consists of a feature extractor, an aggregator, and a classification head (Figure 2). Because WSIs are too large to train end to end with our limited computational resources, we used a two-stage training strategy: the feature extractor was trained in the first stage, and the aggregator and classification head were trained in the second stage.

ResNet (25), pre-trained on a natural-image dataset, i.e., ImageNet (26), was chosen as the backbone of the feature extractor. To reduce the domain gap between natural and medical images, the ResNet was fine-tuned on the LZUFH_CRC dataset through patch-level classification. Each input WSI was divided into patches, and feeding all patches of a WSI into the fine-tuned ResNet yielded a long feature sequence.

A ViT (27) with cosine (Cos) positional encoding (27,28) was used to aggregate the features. Inspired by Lu’s method (29), we emphasized the crucial patches by selecting a subset of important patch features and feeding them to a ViT module. Specifically, we computed the attention matrix over the patches, averaged the attention scores for each patch, and ranked the patch features by this average. Alongside this selected (key) sequence, we also fed the complete sequence to a second branch to preserve global features. Because the complete sequence is too long for standard attention computation, we used Nyström attention (30), which approximates the attention matrix and reduces computational overhead.
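As a rough illustration of the Nyström approximation cited above, the sketch below follows the Nyströmformer formulation, in which landmark queries and keys are segment means of the sequence and the full n×n softmax matrix is replaced by a product of three small softmax matrices. It is a single-head NumPy sketch, not the authors’ implementation: it uses an exact `np.linalg.pinv` instead of the iterative pseudo-inverse used in Nyströmformer, and it assumes the sequence length is a multiple of the number of landmarks (real implementations pad the sequence). The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def exact_attention(q, k, v):
    """Standard softmax attention; builds the full n x n matrix."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def nystrom_attention(q, k, v, m):
    """Nystrom approximation of softmax attention with m segment-mean landmarks.

    q, k, v: (n, d) arrays; this sketch requires n to be a multiple of m.
    """
    n, d = q.shape
    assert n % m == 0, "this sketch assumes n is a multiple of m"
    q_l = q.reshape(m, n // m, d).mean(axis=1)   # (m, d) landmark queries
    k_l = k.reshape(m, n // m, d).mean(axis=1)   # (m, d) landmark keys
    scale = 1.0 / np.sqrt(d)
    f = softmax(q @ k_l.T * scale)               # (n, m)
    a = softmax(q_l @ k_l.T * scale)             # (m, m)
    b = softmax(q_l @ k.T * scale)               # (m, n)
    # Multiplying right to left never materializes an n x n matrix.
    return f @ (np.linalg.pinv(a) @ (b @ v))


rng = np.random.default_rng(0)
n, d = 64, 16
q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
approx = nystrom_attention(q, k, v, m=8)       # 8 landmarks: O(n * m) memory
exact = exact_attention(q, k, v)               # O(n^2) memory
# With one landmark per token (m = n) the approximation is exact,
# because A @ pinv(A) @ A == A for any matrix A.
same = np.allclose(nystrom_attention(q, k, v, m=n), exact)
```

With thousands of patch tokens per WSI, the memory saving from never forming the n×n matrix is what makes attention over the complete sequence feasible.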

Finally, the outputs of the two attention branches were concatenated and passed through a multi-layer perceptron (MLP) classification head, and the fine-grained subtype was predicted as the class with the maximum logit. During training, a contrastive loss was added as a regularization term to the standard cross-entropy loss: global average pooling was applied to the features from both ViT modules, and the contrastive loss (31) was computed between the two pooled features, which form a pair because they come from the same WSI.
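The training objective can be sketched as cross-entropy plus a weighted regularizer on the two pooled branch outputs. The exact form of the contrastive term is not given in the text, so the agreement term below (one minus the cosine similarity of the globally average-pooled features, treating the two branches of the same WSI as a positive pair) is an assumption; a full contrastive loss such as InfoNCE would also need negative pairs, which a batch of one WSI does not provide. The function names are hypothetical, and the default weight of 0.4 is the value reported in the implementation details.

```python
import numpy as np


def cross_entropy(logits, target):
    """Cross-entropy for a single WSI (one slide per batch)."""
    z = logits - logits.max()                  # stabilize the softmax
    log_probs = z - np.log(np.exp(z).sum())
    return -log_probs[target]


def agreement_loss(tokens_full, tokens_topk):
    """Hypothetical regularizer: 1 - cosine similarity of the two pooled branch outputs."""
    a = tokens_full.mean(axis=0)               # global average pooling, full sequence
    b = tokens_topk.mean(axis=0)               # global average pooling, key sequence
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12)
    return 1.0 - cos


def total_loss(logits, target, tokens_full, tokens_topk, weight=0.4):
    """Cross-entropy plus the weighted regularization term."""
    return cross_entropy(logits, target) + weight * agreement_loss(tokens_full, tokens_topk)


logits = np.array([2.0, 0.5, 0.1, -1.0, 0.0])   # class order assumed: MSI, BRAF, KRAS, NRAS, Other
aligned = total_loss(logits, 0, np.ones((1000, 512)), np.ones((200, 512)))
e0, e1 = np.eye(4)[0], np.eye(4)[1]
orthogonal = agreement_loss(np.tile(e0, (3, 1)), np.tile(e1, (2, 1)))
```

When the two pooled features point in the same direction, the regularizer vanishes and the loss reduces to cross-entropy; orthogonal features add the full weight.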

Implementation details

For the feature extractor, we used the standard ResNet-18 model, trained with the Adam optimizer (learning rate 1e−5, weight decay 1e−5), a batch size of 512, and 50 epochs. For the aggregator, the standard ViT module was used (28). The output feature dimension of the ViT was set to 512, and this size was kept throughout the remaining processing. Final classification was performed by an MLP with two hidden layers of 1,024 and 256 dimensions, respectively. The aggregator and classification head were trained with the Adam optimizer (learning rate 1e−4, weight decay 1e−5), and the weight of the contrastive loss was 0.4. Because the length of the patch sequence varies across WSIs, we processed one WSI per batch and trained the model for 50 epochs.
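The classification head described above can be sketched as a forward pass. The sketch assumes that each branch is pooled to a 512-dimensional vector before concatenation (giving a 1,024-dimensional input), ReLU activations, He-style random initialization, and five output classes matching Table 1; beyond the 512-dimensional features and the 1,024/256 hidden sizes, none of these details is stated in the text, and the function names are hypothetical.

```python
import numpy as np


def init_mlp_head(rng, dims=(1024, 1024, 256, 5)):
    """Random weights for an MLP: 1,024-d input -> hidden 1,024 -> hidden 256 -> 5 logits."""
    return [(rng.standard_normal((i, o)) * np.sqrt(2.0 / i), np.zeros(o))
            for i, o in zip(dims[:-1], dims[1:])]


def mlp_head(params, x):
    """Forward pass; ReLU after each hidden layer, raw logits at the output."""
    for layer, (w, b) in enumerate(params):
        x = x @ w + b
        if layer < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


rng = np.random.default_rng(0)
pooled_full = rng.standard_normal(512)    # pooled output of the full-sequence branch
pooled_topk = rng.standard_normal(512)    # pooled output of the key-sequence branch
logits = mlp_head(init_mlp_head(rng), np.concatenate([pooled_full, pooled_topk]))
pred = int(np.argmax(logits))             # subtype with the maximum logit
```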

Results

Evaluation of the model’s performance

We assessed the reliability of the results by comparing the predictions with the molecular annotations provided by pathologists. Standard machine-learning metrics, i.e., ACC, AUC, precision, recall, and F1-score, were used for evaluation (13).
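For reference, accuracy and macro-averaged precision, recall, and F1-score can be computed from a confusion matrix as below. This is a minimal sketch on toy labels; macro (unweighted per-class) averaging is an assumption about how the averages were obtained, and the function name is hypothetical.

```python
import numpy as np


def macro_metrics(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision, recall and F1 (one-vs-rest per class)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)   # rows: truth, cols: prediction
    tp = np.diag(cm).astype(float)
    pred_count, true_count = cm.sum(axis=0), cm.sum(axis=1)
    precision = np.divide(tp, pred_count, out=np.zeros(n_classes), where=pred_count > 0)
    recall = np.divide(tp, true_count, out=np.zeros(n_classes), where=true_count > 0)
    pr_sum = precision + recall
    f1 = np.divide(2 * precision * recall, pr_sum, out=np.zeros(n_classes), where=pr_sum > 0)
    return {"acc": tp.sum() / cm.sum(), "precision": precision.mean(),
            "recall": recall.mean(), "f1": f1.mean()}


metrics = macro_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
```

With equal class counts, as in this toy example, macro recall coincides with accuracy (both 2/3 here).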

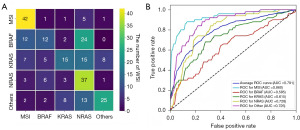

The proposed fine-grained molecular-based CRC classification model was first evaluated on the LZUFH_CRC test set, achieving an overall ACC of 0.524 (Table 1). As shown in Figure 3A and Table 1, MSI and NRAS were classified well, with F1-scores of 0.724 and 0.514, respectively, whereas BRAF and KRAS were classified poorly, with F1-scores of 0.316 and 0.380, respectively. The receiver operating characteristic (ROC) curves obtained by varying the classification threshold are shown in Figure 3B; the average AUC was 0.791.

Table 1

| Molecular type | ACC | Precision | Recall | F1-score | AUC |
|---|---|---|---|---|---|
| MSI | – | 0.636 | 0.84 | 0.724 | 0.86 |
| BRAF | – | 0.462 | 0.24 | 0.316 | 0.585 |
| KRAS | – | 0.517 | 0.3 | 0.380 | 0.615 |
| NRAS | – | 0.394 | 0.74 | 0.514 | 0.728 |
| Other | – | 0.714 | 0.5 | 0.588 | 0.725 |
| Averages | 0.524 | 0.545 | 0.524 | 0.504 | 0.791 |

ACC, accuracy; AUC, area under the receiver operating characteristic curve; CRC, colorectal cancer; H&E, hematoxylin and eosin; LZUFH_CRC dataset, a dataset constructed using H&E-stained tissue section images of 383 CRC patients from The First Hospital of Lanzhou University; MSI, microsatellite instability.
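As a quick arithmetic check, the “Averages” row of Table 1 for precision, recall, and F1-score equals the unweighted mean of the five per-class rows, and each per-class F1-score is the harmonic mean of that class’s precision and recall. The snippet below only reuses values copied from Table 1.

```python
# Per-class values copied from Table 1 (MSI, BRAF, KRAS, NRAS, Other).
precision = [0.636, 0.462, 0.517, 0.394, 0.714]
recall = [0.84, 0.24, 0.3, 0.74, 0.5]
f1 = [0.724, 0.316, 0.380, 0.514, 0.588]


def mean(xs):
    return sum(xs) / len(xs)


# F1 is the harmonic mean of precision and recall for each class.
f1_from_pr = [round(2 * p * r / (p + r), 3) for p, r in zip(precision, recall)]
# Unweighted (macro) means, to compare with the "Averages" row.
macro = [round(mean(xs), 3) for xs in (precision, recall, f1)]
print(f1_from_pr, macro)
```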

Visualization of the feature activation map of the proposed model

To understand which regions the proposed DL model attends to, we visualized feature activation maps for different input WSIs, as shown in Figure 4. The scores indicate the level of attention assigned to each WSI patch, from 0 (lowest) to 1 (highest). Scores closer to 1 mark regions whose features the model relies on more during learning, suggesting that the DL model can automatically determine which regions of the raw data are more important and extract their features.
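The 0-to-1 attention scale described above can be illustrated by min-max normalizing per-patch attention scores and placing them back on the patch grid. This is a minimal sketch; the text does not state how the scores were normalized, so min-max scaling and the function name `attention_heatmap` are assumptions.

```python
import numpy as np


def attention_heatmap(scores, coords, grid_shape):
    """Min-max scale per-patch attention to [0, 1] and place it on the patch grid.

    scores: (n,) raw attention scores; coords: (n, 2) integer (row, col) grid positions.
    Grid cells without a tissue patch stay NaN so they can be drawn as background.
    """
    scores = np.asarray(scores, dtype=float)
    span = scores.max() - scores.min()
    norm = (scores - scores.min()) / span if span > 0 else np.zeros_like(scores)
    heat = np.full(grid_shape, np.nan)
    rows, cols = np.asarray(coords).T
    heat[rows, cols] = norm
    return heat


heat = attention_heatmap([0.2, 0.8, 0.5], [(0, 0), (0, 1), (1, 1)], grid_shape=(2, 2))
```

The resulting grid can then be upsampled to the size of the slide thumbnail and overlaid as a color map.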

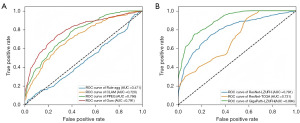

Comparison with different methods

Using ResNet (25) as the feature extractor, we evaluated three state-of-the-art models on the LZUFH_CRC dataset. As shown in Table 2 and Figure 4, Rule-agg (12), Clustering-constrained Attention Multiple Instance Learning (CLAM) (29), and Pyramid Position Encoding Generator (PPEG) (32) achieved ACCs of 0.212, 0.413, and 0.453, respectively. Our model improved the ACC to 0.524, highlighting its potential for accurate classification. Its F1-score and AUC were also clearly higher than those of the existing methods (Figure 5A).

Table 2

| Models | Extractor | Dataset | ACC | Precision | Recall | F1-score | AUC |
|---|---|---|---|---|---|---|---|
| Rule-agg (12) | ResNet (25) | LZUFH_CRC | 0.212 | 0.211 | 0.208 | 0.216 | 0.471 |
| CLAM (29) | ResNet (25) | LZUFH_CRC | 0.413 | 0.424 | 0.413 | 0.393 | 0.72 |
| PPEG (32) | ResNet (25) | LZUFH_CRC | 0.453 | 0.471 | 0.453 | 0.452 | 0.766 |
| Ours | ResNet (25) | LZUFH_CRC | 0.524 | 0.545 | 0.524 | 0.504 | 0.791 |
| Ours | ResNet (25) | TCGA_CRC | 0.566 | 0.422 | 0.566 | 0.47 | 0.731 |
| Ours | GigaPath (33) | LZUFH_CRC | 0.628 | 0.623 | 0.628 | 0.620 | 0.894 |

ACC, accuracy; AUC, area under the receiver operating characteristic curve; CLAM, Clustering-constrained Attention Multiple Instance Learning; CRC, colorectal cancer; H&E, hematoxylin and eosin; LZUFH_CRC dataset, a dataset constructed using H&E-stained tissue section images of 383 CRC patients from The First Hospital of Lanzhou University; Ours, our proposed method and default settings in this study; PPEG, Pyramid Position Encoding Generator; TCGA, The Cancer Genome Atlas.

To verify the generalization of our model, that is, its ability to make accurate predictions on data from a different source, we also evaluated it on the TCGA_CRC dataset. Compared with LZUFH_CRC, it obtained a higher ACC (0.566) but a lower F1-score (0.47) and AUC (0.731). Furthermore, we explored fine-grained classification with a stronger feature extractor, GigaPath (33), a large pre-trained model for medical image processing. As shown in Figure 5B and the last row of Table 2, the stronger feature extractor increased our model’s ACC to 0.628, its F1-score to 0.620, and its AUC to 0.894.

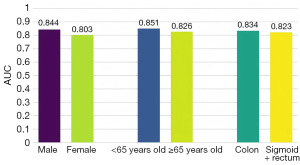

Impact of different clinical groupings on model performance

To investigate whether our model’s performance was influenced by clinical characteristics, we stratified the dataset by gender, age, and tumor location and analyzed the AUC for each group, as shown in Figure 6. Male and female patients accounted for 60% and 40% of the samples, respectively, which broadly conforms to the population distribution in China. The AUCs for male and female patients were similar (0.844 and 0.803, respectively), suggesting that the model’s performance is largely independent of gender.
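Subgroup analysis of this kind amounts to computing the AUC separately within each stratum. A minimal sketch, assuming a macro one-vs-rest AUC over the classes present in each group (the text does not state how the multi-class AUC was averaged) and computing each binary AUC as the probability that a positive slide outranks a negative one (the Mann-Whitney formulation). The toy probabilities, labels, and function names are hypothetical.

```python
import numpy as np


def binary_auc(scores, is_pos):
    """AUC as the probability that a random positive outranks a random negative (ties count 1/2)."""
    scores, is_pos = np.asarray(scores, float), np.asarray(is_pos, bool)
    diff = scores[is_pos][:, None] - scores[~is_pos][None, :]
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size


def macro_ovr_auc(probs, y_true):
    """Macro one-vs-rest AUC over classes that have both positives and negatives."""
    probs, y_true = np.asarray(probs, float), np.asarray(y_true)
    aucs = [binary_auc(probs[:, c], y_true == c) for c in range(probs.shape[1])
            if 0 < (y_true == c).sum() < len(y_true)]
    return float(np.mean(aucs))


def auc_by_group(probs, y_true, groups):
    """Stratify slides by a clinical variable (e.g. sex) and compute the AUC per stratum."""
    probs, y_true, groups = np.asarray(probs), np.asarray(y_true), np.asarray(groups)
    return {g: macro_ovr_auc(probs[groups == g], y_true[groups == g])
            for g in np.unique(groups)}


probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6],
         [0.4, 0.5, 0.1], [0.5, 0.4, 0.1], [0.1, 0.2, 0.7]]
y_true = [0, 1, 2, 0, 1, 2]
sex = ["male", "male", "male", "female", "female", "female"]
by_sex = auc_by_group(probs, y_true, sex)
```

In this toy example, the male stratum is ranked perfectly (AUC 1.0) and the female stratum confuses classes 0 and 1 (AUC 2/3). Comparable per-group values, as reported for the real data, are what support the absence of obvious bias.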

A similar pattern was observed for age and tumor location, suggesting that our model did not exhibit obvious bias during learning.

Ablation experiments

We conducted four groups of ablation experiments to evaluate the impact of each component on the overall performance of the model. As shown in Table 3, the last row contains all the hyperparameters we finally used.

Table 3

| Group | Positional embedding | Top-k ratio | Contrastive loss weight | Output feature | ACC | AUC |
|---|---|---|---|---|---|---|
| 1 | – | 0.2 | 0.4 | Pooling | 0.464 | 0.776 |
| 2 | Cos | 0 | – | Pooling | 0.492 | 0.779 |
| 2 | Cos | 0.1 | 0.4 | Pooling | 0.504 | 0.787 |
| 2 | Cos | 0.3 | 0.4 | Pooling | 0.492 | 0.784 |
| 3 | Cos | 0.2 | 0 | Pooling | 0.508 | 0.783 |
| 3 | Cos | 0.2 | 0.2 | Pooling | 0.52 | 0.791 |
| 3 | Cos | 0.2 | 0.6 | Pooling | 0.508 | 0.79 |
| 3 | Cos | 0.2 | 0.8 | Pooling | 0.488 | 0.788 |
| 3 | Cos | 0.2 | 1 | Pooling | 0.2 | 0.5 |
| 4 | Cos | 0.2 | 0.4 | CLS | 0.496 | 0.78 |
| Ours | Cos | 0.2 | 0.4 | Pooling | 0.524 | 0.791 |

Group 1, with or without the commonly used Cos positional embedding; Group 2, different ratios of the key (Top-k) sequence; Group 3, different contrastive-loss weights with the Top-k ratio fixed at the optimal value of 0.2; Group 4, different methods of obtaining the aggregator output features. ACC, accuracy; AUC, area under the receiver operating characteristic curve; CLS, classification token; Cos, cosine; Ours, our proposed method and default settings in this study; Top-k, proportion of patches retained in the key sequence.

First, because our model incorporates a ViT, we tested whether to use the commonly used Cos positional embedding. In the first group of experiments, the model with positional encoding clearly outperformed the model without it (ACC 0.524 vs. 0.464; AUC 0.791 vs. 0.776).
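For readers unfamiliar with the Cos positional embedding, the standard fixed sine/cosine encoding from the Transformer literature (28) can be written in a few lines. This sketch assumes a 1-D encoding over the order of the patch sequence and the 512-dimensional features used here; whether the authors used this exact 1-D form or a 2-D variant over patch coordinates is not stated, and the function name is hypothetical.

```python
import numpy as np


def sinusoidal_encoding(n_positions, dim):
    """Fixed sine/cosine positional encoding: PE[p, 2i] = sin(p / 10000^(2i/dim)),
    PE[p, 2i+1] = cos(p / 10000^(2i/dim)); dim must be even."""
    assert dim % 2 == 0, "dim must be even"
    pos = np.arange(n_positions)[:, None]                # (n, 1)
    freq = 1.0 / 10000 ** (np.arange(0, dim, 2) / dim)   # (dim / 2,)
    pe = np.zeros((n_positions, dim))
    pe[:, 0::2] = np.sin(pos * freq)
    pe[:, 1::2] = np.cos(pos * freq)
    return pe


features = np.zeros((6, 512))                       # stand-in for one WSI's patch-feature sequence
tokens = features + sinusoidal_encoding(6, 512)     # encoding is added, not concatenated
```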

Second, the contrastive loss between the complete sequence and the key (Top-k) sequence can be computed only when the key sequence is used; without it, this loss term is unavailable. In the second group of experiments, we selected key sequences using different proportions. A proportion of 0.2 performed slightly better than proportions of 0.1 and 0.3.
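The key-sequence selection can be sketched as ranking patches by their mean attention and keeping the top fraction (0.2 in the final model). This is a minimal sketch under assumptions: “averaging the attention scores” is read here as averaging each column of the attention matrix (the attention a patch receives from all queries), the selected patches are kept in their original order, and the function name is hypothetical.

```python
import numpy as np


def select_key_patches(features, attn, ratio=0.2):
    """Keep the top `ratio` fraction of patches ranked by mean received attention.

    features: (n, d) patch features; attn: (n, n) attention matrix, rows = queries.
    """
    n = features.shape[0]
    k = max(1, int(round(ratio * n)))
    received = attn.mean(axis=0)                     # mean attention each patch receives
    top = np.argsort(-received, kind="stable")[:k]   # highest-scoring patches first
    keep = np.sort(top)                              # restore original sequence order
    return features[keep], keep


row = np.array([0.05, 0.15, 0.12, 0.6, 0.08])        # every query attends the same way
attn = np.tile(row, (5, 1))
features = np.arange(10).reshape(5, 2)
key_feats, keep = select_key_patches(features, attn, ratio=0.2)   # keeps patch 3
_, keep_04 = select_key_patches(features, attn, ratio=0.4)        # keeps patches 1 and 3
```

A ratio of 0, as in the first row of the second group in Table 3, corresponds to disabling the key-sequence branch entirely rather than calling this function.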

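In the third group of experiments, with the proportion fixed at the optimal value of 0.2, we tested different contrastive-loss weights. Moderate weights improved performance over no contrastive loss (ACC 0.52 at 0.2 and 0.524 at 0.4 vs. 0.508 at 0), whereas larger weights brought no further gain or degraded performance, and a weight of 1 reduced the model to chance level (ACC 0.2, AUC 0.5).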

Finally, in the fourth group of experiments, obtaining the output feature by pooling outperformed using the classification token (CLS) (ACC 0.524 vs. 0.496).
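The two readout options compared in the fourth group differ only in how the aggregator’s output tokens are reduced to one slide-level vector. A minimal sketch, assuming a hypothetical token layout in which row 0 holds the CLS token and the remaining rows hold patch tokens; the function name is also hypothetical.

```python
import numpy as np


def readout(tokens, mode="pooling"):
    """Reduce transformer output tokens to one slide-level vector.

    tokens: (n + 1, d) array; row 0 is the CLS token (hypothetical layout).
    "pooling": global average over the n patch tokens; "cls": the CLS token itself.
    """
    if mode == "pooling":
        return tokens[1:].mean(axis=0)
    if mode == "cls":
        return tokens[0]
    raise ValueError(f"unknown readout mode: {mode}")


tokens = np.array([[9.0, 9.0], [1.0, 2.0], [3.0, 4.0]])
pooled = readout(tokens, "pooling")   # mean of the two patch tokens
cls = readout(tokens, "cls")
```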

Discussion

CRC is one of the malignancies with the highest morbidity and mortality worldwide. Precision medicine tailors treatment plans to each patient’s genetic profile and the molecular characteristics of their tumor, which can improve patients’ survival and quality of life (34,35). Accurate molecular testing provides a wealth of information for precision medicine (34). In CRC, molecular testing identifies BRAF, KRAS, and NRAS mutations, which guides targeted therapies that can significantly enhance treatment effectiveness, prolong progression-free survival, and improve overall survival (36,37); it also determines MSI status, guiding the use of immunotherapy where appropriate (38). However, traditional molecular detection methods have many drawbacks, so achieving accurate and efficient molecular detection remains a key challenge (37).

In recent years, AI and DL have advanced significantly in medical image analysis and genomics research. Since Kather et al. first applied a CNN to predict the MSI status of CRC from H&E-stained sections in 2019 (11), research in this area has progressed substantially. Recently, Chang et al. achieved an AUC of 0.954 for diagnosing MSI status in CRC by combining the CNN model INSIGHT with a self-attention model (14). Li et al. demonstrated that DL could discriminate between BRAF, KRAS, and NRAS genotypes in left-sided CRC (20). However, these studies share a limitation: each has focused either on MSI classification for immunotherapy alone or on BRAF, KRAS, and NRAS classification for targeted therapy alone. This is insufficient for precision medicine, in which appropriate drugs are chosen according to the full set of identified gene alterations.

This study aims to improve the accuracy and efficiency of fine-grained molecular detection for CRC by introducing a new dataset with gene-level annotations and optimizing DL models, thereby providing more reliable technical support for precision medicine. It not only promotes the development of molecular detection technology for CRC but also provides a reference for precision medicine in other types of cancer.

The dataset used in this paper comes from LZUFH; after screening, H&E sections from a total of 383 patients were included. Unlike the TCGA dataset, which was collected in the US, our dataset mainly comprises cases from northwestern China, which compensates for the lack of Asian data in public databases. In addition, we grouped patients by gender, age, and tumor location to examine whether the model was biased in its decision-making, thereby assessing its accuracy and reliability.

Using LZUFH_CRC as the internal dataset, we proposed a model for the fine-grained classification of CRC that consists of three parts: a CNN as the feature extractor, a ViT as the feature aggregator, and an MLP as the classification head. It predicts five molecular categories from H&E-stained pathological sections. The model performed well on the LZUFH_CRC dataset, achieving an AUC of 0.791. For a more comprehensive evaluation, it was also tested on an external dataset, TCGA, where it achieved an AUC of 0.731, somewhat lower than but comparable to its performance on the internal LZUFH_CRC dataset. These results suggest that our model can learn the nuances between molecular subtypes of CRC from H&E sections and performs reasonably well in this multi-class classification task.

In our study, we also applied GigaPath as the feature extractor, a large pre-trained model for medical imaging proposed by Xu et al. in Nature in 2024 (33). It further raised the AUC to 0.894, demonstrating that our model can be adapted to different extractors with stability and reliability. We also observed an interesting phenomenon: in clinical practice, pathologists usually focus on the tumor parenchyma when diagnosing tumors, whereas the DL model in this study focused more on the transition zone between tumor and non-tumor regions and on the mesenchymal region of the tumor. This might indicate that paying more attention to these regions in clinical diagnostic work could improve diagnostic accuracy. Finally, for the groupings by clinical characteristics, such as gender, age, and site of onset, the analysis showed no significant difference between groups. This is in line with actual clinical diagnosis and indicates that our model was not affected by these factors and showed no obvious bias, providing a more solid foundation for future clinical application.

Although we validated the feasibility of fine-grained CRC classification, presented a benchmark, and demonstrated relatively good model performance, the ACC remained relatively low. This may be related to the limitations of current AI models and the lack of data sharing between hospitals, which makes it difficult to obtain sufficient sample sizes.

In future research, we will collaborate with medical institutions in other regions to expand the existing dataset into a comprehensive data resource covering multiple centers and regions. Meanwhile, we will continue to explore transfer learning or parameter fine-tuning to improve the performance of the model, and develop deep-learning-based detection methods for the molecular typing of CRC at the clinical level.

Conclusions

In this study, we established a multi-class, fine-grained benchmark for predicting molecular types from pathological H&E images, an approach that could reduce the cost and time of detection compared with traditional molecular detection methods. By combining a CNN and a ViT in the model design, our approach clearly outperformed the compared models and has practical value and potential for wider adoption. This study could also serve as a reference for pathological image analysis of other types of cancer, promoting cancer precision medicine and innovation in computer-aided diagnosis.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD reporting checklist. Available at https://tcr.amegroups.com/article/view/10.21037/tcr-2024-2348/rc

Data Sharing Statement: Available at https://tcr.amegroups.com/article/view/10.21037/tcr-2024-2348/dss

Peer Review File: Available at https://tcr.amegroups.com/article/view/10.21037/tcr-2024-2348/prf

Funding: This study was supported by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://tcr.amegroups.com/article/view/10.21037/tcr-2024-2348/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Ethics Committee of The First Hospital of Lanzhou University (No. LDYYLL-2024-740) and the requirement for individual consent for this retrospective analysis was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Sung H, Ferlay J, Siegel RL, et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J Clin 2021;71:209-49. [Crossref] [PubMed]

- Bray F, Laversanne M, Sung H, et al. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin 2024;74:229-63. [Crossref] [PubMed]

- Ness RM, Llor X, Abbass MA, et al. NCCN Guidelines® Insights: Colorectal Cancer Screening, Version 1.2024. J Natl Compr Canc Netw 2024;22:438-46. [Crossref] [PubMed]

- Pritchard CC, Grady WM. Colorectal cancer molecular biology moves into clinical practice. Gut 2011;60:116-29. [Crossref] [PubMed]

- Yaeger R, Chatila WK, Lipsyc MD, et al. Clinical Sequencing Defines the Genomic Landscape of Metastatic Colorectal Cancer. Cancer Cell 2018;33:125-136.e3. [Crossref] [PubMed]

- Kumar N, Gupta R, Gupta S. Whole Slide Imaging (WSI) in Pathology: Current Perspectives and Future Directions. J Digit Imaging 2020;33:1034-40. [Crossref] [PubMed]

- El Nahhas OSM, van Treeck M, Wölflein G, et al. From whole-slide image to biomarker prediction: end-to-end weakly supervised deep learning in computational pathology. Nat Protoc 2025;20:293-316. [Crossref] [PubMed]

- Boland CR, Goel A. Microsatellite instability in colorectal cancer. Gastroenterology 2010;138:2073-2087.e3. [Crossref] [PubMed]

- Casak SJ, Marcus L, Fashoyin-Aje L, et al. FDA Approval Summary: Pembrolizumab for the First-line Treatment of Patients with MSI-H/dMMR Advanced Unresectable or Metastatic Colorectal Carcinoma. Clin Cancer Res 2021;27:4680-4. [Crossref] [PubMed]

- Van Cutsem E, Köhne CH, Láng I, et al. Cetuximab plus irinotecan, fluorouracil, and leucovorin as first-line treatment for metastatic colorectal cancer: updated analysis of overall survival according to tumor KRAS and BRAF mutation status. J Clin Oncol 2011;29:2011-9. [Crossref] [PubMed]

- Kather JN, Krisam J, Charoentong P, et al. Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study. PLoS Med 2019;16:e1002730. [Crossref] [PubMed]

- Echle A, Grabsch HI, Quirke P, et al. Clinical-Grade Detection of Microsatellite Instability in Colorectal Tumors by Deep Learning. Gastroenterology 2020;159:1406-1416.e11. [Crossref] [PubMed]

- Wagner SJ, Reisenbüchler D, West NP, et al. Transformer-based biomarker prediction from colorectal cancer histology: A large-scale multicentric study. Cancer Cell 2023;41:1650-1661.e4. [Crossref] [PubMed]

- Chang X, Wang J, Zhang G, et al. Predicting colorectal cancer microsatellite instability with a self-attention-enabled convolutional neural network. Cell Rep Med 2023;4:100914. [Crossref] [PubMed]

- Williams DKA Jr, Graifman G, Hussain N, et al. Digital pathology, deep learning, and cancer: a narrative review. Transl Cancer Res 2024;13:2544-60. [Crossref] [PubMed]

- Adebayo AS, Agbaje K, Adesina SK, et al. Colorectal Cancer: Disease Process, Current Treatment Options, and Future Perspectives. Pharmaceutics 2023;15:2620. [Crossref] [PubMed]

- De Roock W, Claes B, Bernasconi D, et al. Effects of KRAS, BRAF, NRAS, and PIK3CA mutations on the efficacy of cetuximab plus chemotherapy in chemotherapy-refractory metastatic colorectal cancer: a retrospective consortium analysis. Lancet Oncol 2010;11:753-62. [Crossref] [PubMed]

- Kim C, Agarwal S, Bychkov A, et al. Differentiating BRAF V600E- and RAS-like alterations in encapsulated follicular patterned tumors through histologic features: a validation study. Virchows Arch 2024;484:645-56. [Crossref] [PubMed]

- Jiang Y, Chan CKW, Chan RCK, et al. Identification of Tissue Types and Gene Mutations From Histopathology Images for Advancing Colorectal Cancer Biology. IEEE Open J Eng Med Biol 2022;3:115-23. [Crossref] [PubMed]

- Li X, Chi X, Huang P, et al. Deep neural network for the prediction of KRAS, NRAS, and BRAF genotypes in left-sided colorectal cancer based on histopathologic images. Comput Med Imaging Graph 2024;115:102384. [Crossref] [PubMed]

- Russell BC, Torralba A, Murphy KP, et al. LabelMe: a database and web-based tool for image annotation. Int J Comput Vis 2008;77:157-73. [Crossref]

- Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 1979;9:62-6.

- Douglas DH, Peucker TK. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Cartographica: The International Journal for Geographic Information and Geovisualization 1973;10:112-22. [Crossref]

- Macenko M, Niethammer M, Marron JS, et al. A method for normalizing histology slides for quantitative analysis. 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro; 2009:1107-10.

- He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2016:770-8.

- Deng J, Dong W, Socher R, et al. ImageNet: A large-scale hierarchical image database. IEEE Conference on Computer Vision and Pattern Recognition; 2009:248-55.

- Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: transformers for image recognition at scale. Proceedings of the International Conference on Learning Representations (ICLR); 2021.

- Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA. 2017.

- Lu MY, Williamson DFK, Chen TY, et al. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat Biomed Eng 2021;5:555-70. [Crossref] [PubMed]

- Xiong Y, Zeng Z, Chakraborty R, et al. Nyströmformer: A Nyström-based algorithm for approximating self-attention. Proceedings of the AAAI Conference on Artificial Intelligence 2021;35:14138-48. [Crossref] [PubMed]

- Hadsell R, Chopra S, LeCun Y. Dimensionality reduction by learning an invariant mapping. 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06); 17-22 June 2006; New York, NY, USA. IEEE; 2006;2:1735-42.

- Shao Z, Bian H, Chen Y, et al. TransMIL: Transformer based correlated multiple instance learning for whole slide image classification. Advances in Neural Information Processing Systems 2021;34:2136-47.

- Xu H, Usuyama N, Bagga J, et al. A whole-slide foundation model for digital pathology from real-world data. Nature 2024;630:181-8. [Crossref] [PubMed]

- Dienstmann R, Vermeulen L, Guinney J, et al. Consensus molecular subtypes and the evolution of precision medicine in colorectal cancer. Nat Rev Cancer 2017;17:268. [Crossref] [PubMed]

- Li H, Guo L, Wang C, et al. Improving the value of molecular testing: current status and opportunities in colorectal cancer precision medicine. Cancer Biol Med 2023;21:21-8. [Crossref] [PubMed]

- Ambrosini M, Tougeron D, Modest D, et al. BRAF + EGFR +/- MEK inhibitors after immune checkpoint inhibitors in BRAF V600E mutated and deficient mismatch repair or microsatellite instability high metastatic colorectal cancer. Eur J Cancer 2024;210:114290. [Crossref] [PubMed]

- Cancer Genome Atlas Network. Comprehensive molecular characterization of human colon and rectal cancer. Nature 2012;487:330-7. [Crossref] [PubMed]

- Le DT, Durham JN, Smith KN, et al. Mismatch repair deficiency predicts response of solid tumors to PD-1 blockade. Science 2017;357:409-13. [Crossref] [PubMed]